How to Talk to GenAI

TL;DR: Great prompting is just good communication. Be clear, give context, and treat AI like a smart assistant, not a mind reader.

Generative AI tools like ChatGPT are wildly powerful, but most people still use them like a search engine: vague questions, unclear goals, and disappointment when the output misses the mark.

The real magic happens when you treat the AI more like a creative collaborator. That starts with learning how to talk to it well.

Let’s break that down.

Give It a Role

One of the simplest upgrades to your prompts: assign the AI a role.

- “Act as a personal chef who plans healthy meals.”

- “You’re a startup founder pitching investors.”

- “Act like a supportive career coach with a dry sense of humor.”

It’s like casting the AI in a scene: it immediately shifts the tone and direction.

Add Context, a Task, and a Format

Strong prompts usually have three parts:

- Context – What’s the situation?

- Task – What should it do?

- Format – How should it respond?

“I’m working on a new open source project called ‘DataViz’ that helps developers create beautiful data visualizations with just a few lines of code. The project has built-in support for common chart types, real-time updates, and accessibility features. Write an X post announcing the launch. The post should be under 280 characters, include the project name and main features, and end with a call for contributors to join the community.”

This makes your prompt specific, and your output way more usable.
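If you build prompts in code, the three parts map neatly onto three arguments. Here is a minimal sketch; `build_prompt` and its parameter names are made up for illustration, not part of any library.

```python
def build_prompt(context: str, task: str, output_format: str) -> str:
    """Join context, task, and format into one prompt, skipping empty parts."""
    parts = [context, task, output_format]
    return " ".join(p.strip() for p in parts if p.strip())


prompt = build_prompt(
    context="I'm working on an open source project called 'DataViz' "
            "that helps developers create data visualizations.",
    task="Write an X post announcing the launch.",
    output_format="Keep it under 280 characters and end with a call for contributors.",
)
print(prompt)
```

Keeping the parts separate makes it easy to swap the task or the format while reusing the same context.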

Step-Back Prompting: Zoom Out Before You Zoom In

Insight: Step-back prompting means pausing to reflect, reframe, or break down a problem before jumping to a solution, helping improve clarity, reasoning, and outcomes.

Before asking a GenAI model to solve a specific problem, it can be helpful to pause and step back. By first asking a general or higher-level question, you activate the model’s broader reasoning abilities. Then you feed that insight into the more specific prompt.

This technique, called step-back prompting, often results in answers that are more accurate, thoughtful, and well-rounded.

It’s like asking yourself, “What’s the bigger picture here?” before making a decision.

Why Step-Back Prompting Works

LLMs don’t have real understanding, but they’ve been trained on massive amounts of content. When you first ask a question about general principles or fundamentals, it helps the model:

- Tap into more of its internal knowledge

- Avoid biased or overly narrow thinking

- Give deeper, more well-reasoned answers to your real task

Example

Task: You’re drafting a policy for your team around AI use at work. You want the model to help write a short, clear list of dos and don’ts.

Direct Prompt:

Write a list of dos and don’ts for how my team should use AI tools at work.

The list might be surface-level or inconsistent, like:

- Do use AI to draft emails

- Don’t use AI for anything sensitive

- Do double-check AI content

- Don’t copy-paste from ChatGPT directly

Step-Back Prompt:

Step 1: Ask a broader question first

What are the general principles for using AI responsibly in a professional setting?

Step 2: Feed the result into your actual task

Based on the principles below, write a concise, practical list of dos and don’ts for my team using AI at work.

…insert answer from step 1…

Now the list might look more values-driven and consistent:

- Do ensure human review for all AI-generated content

- Don’t let AI make decisions without oversight

- Do follow data privacy policies

- Don’t use AI for tasks requiring original thought or judgment
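The two-step flow is easy to script. The sketch below assumes a hypothetical `ask` function that sends a prompt to whatever model you use and returns its reply; here it is replaced by a stand-in (`fake_ask`) so the example runs without any API.

```python
from typing import Callable

GENERAL_QUESTION = (
    "What are the general principles for using AI responsibly "
    "in a professional setting?"
)


def step_back(ask: Callable[[str], str], general_question: str, task: str) -> str:
    """Ask the broad question first, then feed the answer into the real task."""
    principles = ask(general_question)  # Step 1: zoom out
    follow_up = (
        f"Based on the principles below, {task}\n\n"
        f"Principles:\n{principles}"
    )
    return ask(follow_up)  # Step 2: zoom in


# Stand-in for a real model call (an assumption for this sketch).
# It records every prompt it receives and returns a numbered placeholder reply.
sent: list[str] = []


def fake_ask(prompt: str) -> str:
    sent.append(prompt)
    return f"(model reply {len(sent)})"


answer = step_back(
    fake_ask,
    GENERAL_QUESTION,
    "write a concise, practical list of dos and don'ts for my team using AI at work.",
)
print(sent[1])
```

The second prompt carries the first reply inside it, which is the whole trick: the model's own general answer becomes context for the specific one.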

Tip: LLMs can also help improve your own prompts. Ask the model to find ambiguity or inconsistencies; it’s surprisingly good at making vague prompts sharper.

Meta-Prompting: Let the AI Help You Prompt

Insight: Meta-prompting is the practice of asking the AI to help you write your prompt.

Yes, really.

You prompt the AI to write a prompt, or to improve, rephrase, or optimize one for a clearer, more effective output.

This may sound circular, but it’s incredibly useful. Why?

Because sometimes:

- You’re not sure how to phrase what you want

- You need help making your prompt more specific or structured

- You want to explore different styles or outputs but don’t know how to ask

And GenAI is surprisingly good at helping with… GenAI!

Meta-Prompting in Action

Let’s say you want help writing a bio for your team’s website, but your original prompt is kind of vague:

Write a short bio about ACME.com

You can meta-prompt the model with:

Help me write a more specific prompt that will generate a clear, engaging bio for ACME.com. I want it to sound approachable but confident. The audience is potential clients and partners.

And it might return something like:

Write a professional bio for ACME.com that introduces the company to potential clients and partners. The tone should be approachable yet confident, reflecting a team that’s both expert and easy to work with. Clearly explain what ACME.com does, who it helps, and what makes it stand out. Include any noteworthy achievements, unique methods, or values that guide the work. Keep the language concise, engaging, and free of jargon. Aim for around 100–150 words.

Now that’s a strong prompt, and you didn’t have to write it from scratch.
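If you find yourself doing this often, the wrapper around your vague draft can be templated. A small sketch, where `meta_prompt` and its fields are illustrative names rather than an established API:

```python
def meta_prompt(draft: str, tone: str, audience: str) -> str:
    """Wrap a vague draft prompt in a request to make it more specific."""
    return (
        "Help me write a more specific prompt that will produce a better result.\n"
        f"My draft prompt: {draft!r}\n"
        f"Desired tone: {tone}\n"
        f"Audience: {audience}\n"
        "Return only the improved prompt."
    )


request = meta_prompt(
    draft="Write a short bio about ACME.com",
    tone="approachable but confident",
    audience="potential clients and partners",
)
print(request)
```

Asking for "only the improved prompt" keeps the reply easy to paste straight into your next message.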

AI Is a Master Chef (That’s Never Tasted the Food)

Think of a large language model (LLM) like a master chef who’s read every recipe in the world, but never actually cooked.

It doesn’t know what “spicy” tastes like, but it knows what people usually mean by spicy chicken.

It’s remixing patterns, not inventing from scratch.

Insight: It’s like a parrot that’s read every book: smart at mimicking, not understanding.

It’s Also Fancy Autocomplete

An LLM is basically autocomplete on steroids. You give it a prompt, and it tries to guess the next most likely word, then the next, and the next…

That’s it. It’s guessing, not thinking.
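To make "guess the next word, then the next" concrete, here is a toy autocomplete that only counts which word follows which in a tiny made-up corpus. Real LLMs use neural networks over tokens and usually sample from probabilities rather than always taking the top guess, so treat this as an analogy, not a description of how any actual model is built.

```python
from collections import Counter, defaultdict

corpus = (
    "the cat sat on the mat . the cat ate the fish . the cat sat on the sofa ."
).split()

# Count how often each word follows each other word.
following: dict[str, Counter] = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1


def complete(start: str, length: int = 4) -> list[str]:
    """Greedily append the most frequent next word, one word at a time."""
    words = [start]
    for _ in range(length):
        candidates = following.get(words[-1])
        if not candidates:
            break  # never seen this word, so there is nothing to guess from
        words.append(candidates.most_common(1)[0][0])
    return words


print(" ".join(complete("the")))  # the cat sat on the
```

Notice that the output is fluent but means nothing to the program. It has only seen that "cat" tends to follow "the".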

Which is why…

AI Doesn’t “Know” Things

It doesn’t have beliefs or access to live facts. It just predicts. Which means:

- It may sound confident, even when it’s wrong

- It can make up facts (aka hallucinate)

- It doesn’t check its work

And It’s Trained to Guess Anyway

LLMs are trained to respond, not to admit they’re confused.

Because human reviewers tend to prefer “helpful” answers, the model learns to always take a stab, even if the question is ambiguous.

LLMs Can’t Really Do Math

They don’t calculate, they guess.

That’s why some models use external tools like calculators. When you see math working, it’s often the tool doing the work, not the model itself.
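Here is roughly what "the tool doing the work" can look like: a tiny calculator that the surrounding application could call instead of letting the model guess. This is a sketch under assumptions; `calculate` is a hypothetical helper, and it only handles numbers with +, -, * and /.

```python
import ast
import operator

# Map supported syntax nodes to the arithmetic they perform.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculate(expression: str) -> float:
    """Evaluate simple arithmetic deterministically, without eval()."""

    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)


print(calculate("18 * 250 / 100"))  # 45.0
```

The model's job shrinks to turning the question into an expression; the arithmetic itself is computed, not predicted.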

They’re Stuck in Time

LLMs don’t know anything after their training cutoff date, unless the tool around them can access live info (like browsing).

Use Role–Context–Audience

When crafting basic prompts, use the R-C-A framework:

- Role – Who should it be?

- Context – What’s going on?

- Audience – Who’s it for?

Example:

You’re a senior product manager. Write a 3-line Slack update explaining a delay to the engineering team.

Take It Further: Format, Tone, and Constraints

Add even more control with:

- Format – List, poem, outline, paragraph

- Tone – Casual, formal, bold, warm

- Constraints – Word count, no jargon, specific tense

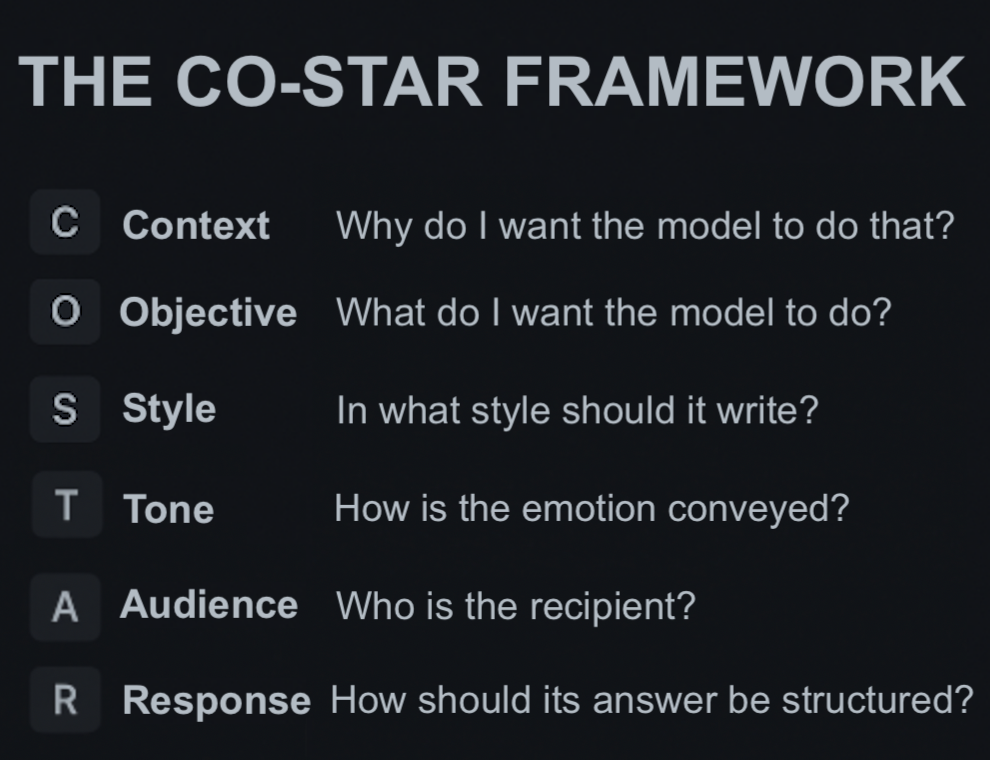

Use Frameworks Like CO-STAR

Frameworks help create consistent, high-quality prompts. One solid one is:

CO-STAR

- Context

- Objective

- Style

- Tone

- Audience

- Response

It’s like a creative brief for the AI.
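A framework like this translates naturally into a small data structure. The sketch below uses the commonly cited CO-STAR fields (Context, Objective, Style, Tone, Audience, Response); the `CoStar` class and its `render` method are illustrative names, and the example values are invented.

```python
from dataclasses import dataclass, fields


@dataclass
class CoStar:
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        """Emit one '# Header' section per field, in CO-STAR order."""
        sections = [
            f"# {f.name.capitalize()}\n{getattr(self, f.name)}"
            for f in fields(self)
        ]
        return "\n\n".join(sections)


brief = CoStar(
    context="We surveyed 500 users about onboarding.",
    objective="Summarize key insights and suggest 2 improvements.",
    style="Plain, direct business writing.",
    tone="Constructive.",
    audience="The product team.",
    response="Bullet points for insights, numbered list for recommendations.",
)
print(brief.render())
```

Because every field is required, forgetting one fails loudly when you build the brief instead of quietly producing a weaker prompt.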

Format Your Prompts Clearly

Structure matters, for both humans and AI.

Use:

- Headers

- Line breaks

- Delimiters (---, ", `, etc.)

- Emphasis (bold/caps)

Example:

# Context

We surveyed 500 users about onboarding.

# Task

Summarize key insights. Suggest 2 improvements.

# Output Format

- Bullet points for insights

- Numbered list for recs

Chain-of-Thought: Get the AI to Think Out Loud

Ask it to think step-by-step, and it usually gives better, more accurate answers.

Without CoT:

What’s 18% of 250?

Response: 45

With CoT:

Let’s solve step-by-step:

18% = 0.18 → 0.18 × 250 = 45

This works because…

LLMs don’t think internally; they generate tokens out loud, one by one.

They need prompting to slow down.

Pro Tip: Even just saying “Let’s think this through step by step” helps.
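In code, adding the cue is a one-liner. The sketch below also double-checks the worked example with exact fractions; `with_cot` and `COT_CUE` are names invented for this illustration.

```python
from fractions import Fraction

COT_CUE = "Let's think this through step by step."


def with_cot(question: str) -> str:
    """Append a step-by-step cue to a question."""
    return f"{question.rstrip()}\n\n{COT_CUE}"


print(with_cot("What's 18% of 250?"))

# Independent check of the worked example: 18% of 250.
percent = Fraction(18, 100)  # 18% as an exact fraction
result = percent * 250       # exact arithmetic, no float rounding
print(result)                # 45
```

Checking the answer outside the model is a good habit: step-by-step output reads as convincing whether or not the final number is right.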

Newer models like GPT-4o now do this automatically for reasoning tasks.

Final Thoughts

Prompting is a skill, and a surprisingly fun one to build.

You don’t need to memorize templates. Just think like a director:

- What’s the scene?

- Who’s the character?

- Who’s the audience?

- What’s the goal?

Give the AI the right stage, and it’ll put on a great show.